If you search for examples of uploading files in JSF with Primefaces, you will find plenty of pages showing how to do it. Unfortunately, almost all of them leave out some crucial details.

In this post, we will look at some important points about uploading files with Primefaces. I will assume you already know how to implement a simple file upload.

PS: there are many resources on how to implement a simple file upload; you can start with this link.


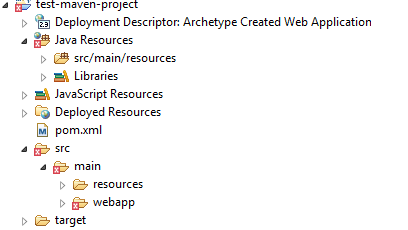

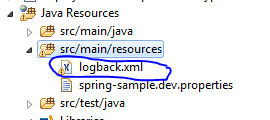

The source code of this tutorial is available on GitHub.



1. Choosing the right "thresholdSize" value

During the upload, uploaded files are kept either in server memory or in temporary files on disk until you finish processing them.

Which of the two is used depends on how the file size compares to a property called sizeThreshold ("The threshold above which uploads will be stored on disk", see DiskFileItemFactory for details).
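To make the rule concrete, here is a minimal sketch of the idea in plain Java. It is not the Commons FileUpload code (DiskFileItem delegates this job to Commons IO's DeferredFileOutputStream), and the ThresholdBuffer class is hypothetical. Bytes stay in memory until more than `threshold` bytes have been written. At that point everything is moved to a temporary file, and all further bytes go straight to disk.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical class illustrating the sizeThreshold rule: data stays in memory
// until more than `threshold` bytes have been written, then it is spilled to a
// temporary file and every further byte goes straight to disk.
public class ThresholdBuffer extends OutputStream {
    private final int threshold;
    private ByteArrayOutputStream memory = new ByteArrayOutputStream();
    private OutputStream disk; // null while the data is still in memory
    private Path tempFile;
    private long written;

    public ThresholdBuffer(int threshold) {
        this.threshold = threshold;
    }

    @Override
    public void write(int b) throws IOException {
        write(new byte[] {(byte) b}, 0, 1);
    }

    @Override
    public void write(byte[] b, int off, int len) throws IOException {
        if (disk == null && written + len > threshold) {
            // Threshold exceeded: move what is buffered so far to a temp file.
            tempFile = Files.createTempFile("upload_", ".tmp");
            disk = Files.newOutputStream(tempFile);
            memory.writeTo(disk);
            memory = null; // the in-memory copy can now be garbage collected
        }
        if (disk != null) {
            disk.write(b, off, len);
        } else {
            memory.write(b, off, len);
        }
        written += len;
    }

    public boolean isInMemory() {
        return disk == null;
    }

    public Path getTempFile() {
        return tempFile;
    }

    @Override
    public void close() throws IOException {
        if (disk != null) {
            disk.close();
        }
    }
}
```

A 10-byte upload with a 10-byte threshold stays in memory. Two 6-byte chunks end up in a 12-byte temp file, because the second chunk pushes the total above the threshold.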

You can set this value in web.xml through the thresholdSize init-param of the Primefaces FileUpload filter (as in the article mentioned above). However, you must choose it carefully, based on how your application is used. Suppose you set a large value (10 MB, for example) and 100 users upload files at the same time. You will then need up to 1 GB of memory just to handle the uploads. If that memory is not available, you will hit OutOfMemoryError, and you will have to increase the maximum heap size every time the number of users grows.
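The arithmetic above can be turned into a quick sizing helper. This is a rough sketch under simplifying assumptions: one file per request, each upload holding at most thresholdSize bytes in memory, and no other per-request overhead. The class and method names are hypothetical.

```java
// Back-of-the-envelope sizing for the thresholdSize init-param.
// Assumes one file per request and that each upload keeps at most
// `thresholdBytes` in memory; real requests add some overhead.
public class ThresholdSizing {

    static final long MB = 1024L * 1024L;

    // Worst-case heap used by uploads that are still held in memory.
    static long worstCaseMemory(int concurrentUploads, long thresholdBytes) {
        return concurrentUploads * thresholdBytes;
    }

    // Largest threshold that keeps the worst case within a memory budget.
    static long maxThreshold(long memoryBudgetBytes, int concurrentUploads) {
        return memoryBudgetBytes / concurrentUploads;
    }

    public static void main(String[] args) {
        // The example from the text: 100 users, 10 MB threshold.
        System.out.println(worstCaseMemory(100, 10 * MB) / MB + " MB"); // 1000 MB
        // A 256 MB budget for uploads, shared by the same 100 users.
        System.out.println(maxThreshold(256 * MB, 100) / 1024 + " KB"); // 2621 KB
    }
}
```

Read the other way round, if you can only spare 256 MB of heap for 100 concurrent uploads, the threshold should stay a little above 2.5 MB.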

2. Make sure your file names are correctly processed

Suppose you store your files after the upload as in the mentioned example, and then upload a file whose name contains French characters (à, é, etc.) or Arabic characters. You will get garbled names (see the screenshots near the top of this post).

The first file was named ààààà.txt; the second had an Arabic name.

The solution is to decode the file name again using the UTF-8 charset. Here is how to do it:

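    import java.nio.charset.Charset;
    import java.nio.charset.StandardCharsets;

    import org.apache.commons.io.FilenameUtils;
    import org.primefaces.event.FileUploadEvent;

    public void handleFileUpload(FileUploadEvent event) {
        // In most cases FileUploadEvent#getFile()#getFileName() returns the base file name,
        // without path information. However, some clients, such as the Opera browser,
        // do include path information (from the FileItem class docs), so strip it.
        String fileName = FilenameUtils.getName(event.getFile().getFileName());

        // The name was decoded with the platform default charset: re-encode it with that
        // charset to recover the original bytes, then decode those bytes as UTF-8.
        // StandardCharsets.UTF_8 avoids the checked UnsupportedEncodingException.
        fileName = new String(fileName.getBytes(Charset.defaultCharset()), StandardCharsets.UTF_8);

        // ... store the uploaded file under fileName
    }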

Now you can try to upload files with special characters and check that everything works fine.
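Why does this work? The following self-contained sketch simulates the problem with two hypothetical helpers. garble() decodes the UTF-8 bytes sent by the browser with the wrong charset (ISO-8859-1 here, standing in for the server's default). repair() applies the same fix as handleFileUpload. Non-ASCII characters are written as Unicode escapes, so the example does not depend on the source file encoding.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

public class FileNameCharsetDemo {

    // Simulates a server that decodes the browser's UTF-8 bytes with another charset.
    static String garble(String original, Charset serverCharset) {
        return new String(original.getBytes(StandardCharsets.UTF_8), serverCharset);
    }

    // Undoes the damage: re-encode with the charset that was wrongly used for
    // decoding to get the original bytes back, then decode them as UTF-8.
    static String repair(String garbled, Charset wronglyUsedCharset) {
        return new String(garbled.getBytes(wronglyUsedCharset), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String[] names = {
            "\u00e0\u00e0\u00e0\u00e0\u00e0.txt", // "ààààà.txt"
            "\u0645\u0644\u0641.txt"               // an Arabic name
        };
        for (String name : names) {
            String garbled = garble(name, StandardCharsets.ISO_8859_1);
            System.out.println(garbled + " -> " + repair(garbled, StandardCharsets.ISO_8859_1));
        }
    }
}
```

One caveat: the round trip is only lossless when the wrongly used charset maps every byte value to a character, as ISO-8859-1 does. As far as I know, windows-1252 leaves a few byte values undefined. One of them is 0x81, which appears in the UTF-8 encoding of the Arabic letter ف, so some names may still come out wrong on such a platform. An alternative worth trying is to tell Commons FileUpload which charset to use for the part headers. You can call setHeaderEncoding("UTF-8") on the ServletFileUpload (a FileUploadBase method), or set the request character encoding to UTF-8 before the request is parsed.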

3. Make sure the temporary files are deleted

As mentioned in the FileUpload docs, resources must be cleaned up when you use DiskFileItem (that is, when uploaded files are written to temporary files on disk). As the "Resource cleanup" paragraph puts it, "an instance of org.apache.commons.io.FileCleaningTracker must be used when creating a org.apache.commons.fileupload.disk.DiskFileItemFactory".

Unfortunately, the Primefaces FileUploadFilter does not do this (see this issue). Until a future release fixes it, you need to define your own filter that extends FileUploadFilter and overrides doFilter as follows:

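    import java.io.File;
    import java.io.IOException;
    import javax.servlet.FilterChain;
    import javax.servlet.ServletException;
    import javax.servlet.ServletRequest;
    import javax.servlet.ServletResponse;
    import javax.servlet.http.HttpServletRequest;
    import org.apache.commons.fileupload.disk.DiskFileItemFactory;
    import org.apache.commons.fileupload.servlet.FileCleanerCleanup;
    import org.apache.commons.fileupload.servlet.ServletFileUpload;
    import org.apache.commons.io.FileCleaningTracker;
    import org.primefaces.webapp.MultipartRequest;

    // bypass, thresholdSize, uploadDir and logger mirror the fields of FileUploadFilter.
    // If they are private in your Primefaces version, declare them in your subclass
    // and initialize them in init() from the filter's init-params.
    @Override
    public void doFilter(ServletRequest request, ServletResponse response, FilterChain filterChain)
            throws IOException, ServletException {
        if (bypass) {
            filterChain.doFilter(request, response);
            return;
        }

        HttpServletRequest httpServletRequest = (HttpServletRequest) request;
        boolean isMultipart = ServletFileUpload.isMultipartContent(httpServletRequest);

        if (isMultipart) {
            logger.debug("Parsing file upload request");

            // Added: the tracker stored in the ServletContext by the FileCleanerCleanup listener
            FileCleaningTracker fileCleaningTracker =
                    FileCleanerCleanup.getFileCleaningTracker(request.getServletContext());
            DiskFileItemFactory diskFileItemFactory = new DiskFileItemFactory();
            // Added: temp files created by this factory are now tracked for deletion
            diskFileItemFactory.setFileCleaningTracker(fileCleaningTracker);

            if (thresholdSize != null) {
                diskFileItemFactory.setSizeThreshold(Integer.valueOf(thresholdSize));
            }
            if (uploadDir != null) {
                diskFileItemFactory.setRepository(new File(uploadDir));
            }

            ServletFileUpload servletFileUpload = new ServletFileUpload(diskFileItemFactory);
            MultipartRequest multipartRequest = new MultipartRequest(httpServletRequest, servletFileUpload);

            logger.debug("File upload request parsed successfully, continuing with filter chain with a wrapped multipart request");
            filterChain.doFilter(multipartRequest, response);
        } else {
            filterChain.doFilter(request, response);
        }
    }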

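And of course, don't forget to add the FileCleanerCleanup listener to your web.xml. This listener creates the FileCleaningTracker that FileCleanerCleanup.getFileCleaningTracker() returns. Without it, that call returns null and no temp file is tracked. The listener also stops the tracker's thread when the application shuts down:

    <listener>
        <listener-class>org.apache.commons.fileupload.servlet.FileCleanerCleanup</listener-class>
    </listener>

Now, according to the FileUpload docs, temp files will be deleted automatically once the corresponding java.io.File instance is no longer used and has been garbage collected.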

Unfortunately, that didn't work for me under Tomcat 7. You may run into the same issue, or you may simply want the files deleted earlier to free system resources.

The best solution I found for deleting these temp files manually was to add a new method to org.primefaces.model.UploadedFile that returns the corresponding org.apache.commons.fileupload.FileItem instance, and then to call that item's delete() method.

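You don't need to get the Primefaces source code and build it yourself. Just create the org.primefaces.model package (the package of UploadedFile) in your project and put your modified copies of the classes there. In Tomcat, classes under WEB-INF/classes are loaded before the jars in WEB-INF/lib, so the classloader will pick up your classes instead of those shipped with Primefaces.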
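Whatever way you get hold of the temp file, the important part is to delete it as soon as you are done with it, even when storing it fails. Here is a minimal sketch of that pattern using only java.nio.file. storeAndDelete() is a hypothetical helper, and tempFile stands in for the file behind the FileItem. In the setup described here, the deletion itself would be file.getFileItem().delete().

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

public class EagerTempCleanup {

    // Copies the spooled upload to its destination, then deletes the temp file
    // right away instead of waiting for GC-driven cleanup. The finally block
    // removes the temp file even if the copy throws.
    static void storeAndDelete(Path tempFile, Path destination) throws IOException {
        try {
            Files.copy(tempFile, destination, StandardCopyOption.REPLACE_EXISTING);
        } finally {
            Files.deleteIfExists(tempFile);
        }
    }

    public static void main(String[] args) throws IOException {
        // A fake temp file standing in for the FileItem's store location.
        Path temp = Files.createTempFile("upload_", ".tmp");
        Files.write(temp, "hello".getBytes(StandardCharsets.UTF_8));
        Path destination = Files.createTempFile("stored_", ".txt");

        storeAndDelete(temp, destination);

        System.out.println(Files.exists(temp)); // false
        System.out.println(new String(Files.readAllBytes(destination), StandardCharsets.UTF_8)); // hello
        Files.delete(destination);
    }
}
```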

4. Make sure you are copying files efficiently

Many examples I saw save files by calling getContents() on the UploadedFile instance. This is a bad practice, since it loads the whole content of the file into server memory. Imagine writing 1000 files of 10 MB each at the same time: that is roughly 10 GB of heap!

So, unless you are sure your files are really small, never call that method. Use getInputstream() instead, as in the article mentioned above. There is an even faster way to copy the content of the file, using the Java NIO API (the source of this solution is here):

    import java.io.FileOutputStream;
    import java.io.IOException;
    import java.io.InputStream;
    import java.io.OutputStream;
    import java.nio.ByteBuffer;
    import java.nio.channels.Channels;
    import java.nio.channels.ReadableByteChannel;
    import java.nio.channels.WritableByteChannel;

    private void fastFileCopy(UploadedFile file, String filePath) {
        // try-with-resources closes both streams, even if the copy fails
        try (InputStream input = file.getInputstream();
             OutputStream output = new FileOutputStream(filePath)) {
            CustomFileUtils.copyStream(input, output);
        } catch (IOException e) {
            LOGGER.error("Error during upload", e);
        } finally {
            // And call the delete method here (getFileItem() is the method added to
            // UploadedFile in section 3), so the temp file is removed even on failure
            file.getFileItem().delete();
        }
    }

    // In CustomFileUtils:
    public static void copyStream(final InputStream input, final OutputStream output)
            throws IOException {
        final ReadableByteChannel inputChannel = Channels.newChannel(input);
        final WritableByteChannel outputChannel = Channels.newChannel(output);
        // copy the channels
        final ByteBuffer buffer = ByteBuffer.allocateDirect(16 * 1024);
        while (inputChannel.read(buffer) != -1) {
            // prepare the buffer to be drained
            buffer.flip();
            // write to the channel, may block
            outputChannel.write(buffer);
            // If partial transfer, shift remainder down
            // If buffer is empty, same as doing clear()
            buffer.compact();
        }
        // EOF will leave buffer in fill state
        buffer.flip();
        // make sure the buffer is fully drained.
        while (buffer.hasRemaining()) {
            outputChannel.write(buffer);
        }
        // The channels wrap the caller's streams; the caller is responsible for closing them
    }

That's the best solution in terms of speed and performance that I can think of for now.
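If you are on Java 7 or later, the standard library also offers a one-line streaming copy, Files.copy(InputStream, Path, CopyOption...). It reads through a small fixed-size buffer instead of loading the whole file. I have not benchmarked it against the NIO version above, so treat it as a simpler alternative rather than a faster one. saveUpload() is a hypothetical helper.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

public class StreamingCopy {

    // Streams the upload to disk through a small buffer managed by the JDK,
    // so memory use does not grow with the file size (unlike getContents()).
    // Returns the number of bytes copied and always closes the input stream.
    static long saveUpload(InputStream input, Path target) throws IOException {
        try (InputStream in = input) {
            return Files.copy(in, target, StandardCopyOption.REPLACE_EXISTING);
        }
    }

    public static void main(String[] args) throws IOException {
        byte[] data = new byte[5 * 1024 * 1024]; // 5 MB of zeros as a fake upload
        Path target = Files.createTempFile("stored_", ".bin");
        long copied = saveUpload(new ByteArrayInputStream(data), target);
        System.out.println(copied); // 5242880
        Files.delete(target);
    }
}
```

In the upload handler, you would pass file.getInputstream() and Paths.get(filePath), then delete the FileItem as before.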

I will be happy to receive any feedback.